In this blog post, Brian Henderson describes the trajectory of his hearing loss and how this has affected his experiences of playing the organ over time.

“I am a 70-year-old church organist, now with moderate hearing loss in both ears. I have played the organ from the age of 18. Other relevant personal information includes a career of Physics teaching up to A level and a 10-year spell of helping a local organ builder after retiring from full-time teaching.

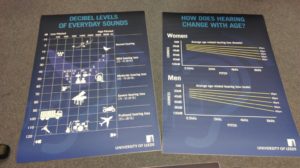

“My first experience of hearing loss was sudden and traumatic. I was making a mobile phone call in 2011 in a busy shopping street and put the phone firmly to my ear to hear it answered. At that exact instant someone called me and the phone rang while against my ear. My head seemed to explode. Luckily I was with family members who helped me to a seat and half an hour later I felt able to move on, but with the realisation that hearing in my left ear was damaged. A visit to my GP the next day brought the news that my hearing might or might not recover. It didn’t. Hospital ENT consultation and an MRI scan followed but produced no answers, and an NHS hearing aid was soon supplied. The loss was worst from 1 kHz upwards, so consonants were missing from speech and the organ upperwork (the higher pitched stops) was lost from my left ear. But I still had a good right ear and I thought life was still mostly fine in spite of the chance in a million that had deafened me.

“I used the aid for conversation, but I took it out for playing as it distorted the organ sounds badly. I read that one of the consequences of sudden hearing loss could be hyperacusis, increased sensitivity to some sounds. This explained why organ notes in the tenor octave were now sounding thick and unpleasant, with tenor A and B booming out from what had been a well-regulated quiet flute stop. After a year or so I realised I was not hearing this. This was my first taste of the ability of the brain to gradually improve an initially troubling situation but little did I know that I would come to rely on this property of the brain to help me a few years on down the line.

“In mid 2015 I became aware that my right ear was not hearing as well as before. It showed up most on the organ where I could no longer hear the highest notes of a 2 foot stop (a stop which plays 2 octaves above piano pitch) and I realised that sounds around 6 kHz and above were gone. There was also a strange blocked feeling in my right ear, with intermittent popping, and I was aware that this right deafness did not feel the same as the left deafness. A GP investigation started, and I tried a nasal spray and inhalation. I used olive oil and later sodium bicarbonate solution but the blocked feeling persisted even though the ear drum was visible. I had an audiogram and a right aid was supplied for what was then described as slight deafness.

“The GP investigation into the blocked feeling continued (now 6 months after it started) and in February 2016 microsuction was performed to remove the small amount of wax that was visible. Initially all seemed well, but 3 hours later I realised my right hearing had gone the same way as my left. Organ experimentation showed a fall off at 2 kHz, not quite as bad as the left but bad enough to make the organ sound dreadful. The hearing loss was described now as moderate in both ears and I found conversation difficult and TV listening often unintelligible. My life seemed to collapse around me. To lose the left hearing had been an accident, but the right deafness seemed the direct result of a GP procedure. I felt bitter and defeated. And my greatest relaxation and my defining role – as church organist – was lost.

“There were two separate but overlapping strands to my life with hearing loss. One was searching for advice about hearing loss and music. The other was an NHS investigation into my sudden right hearing loss. This investigation took the form of two hospital ENT consultations, several audiograms and an MRI scan. The noisy MRI machine accentuated the hyperacusis now present in the right ear but revealed no reasons for my problems. The three audiograms were wildly inconsistent, one even showing normal hearing in the right ear, possibly because my tinnitus and hyperacusis were masking the true situation. This was a time of fear and frustration until in May I was finally passed on to a wonderful senior audiologist who listened intently to my descriptions. I could tell from the way she conducted my hearing test (with quick repetitions and surprising frequency jumps) that she was using her considerable experience to “catch me out”. I was delighted that she produced an audiogram that matched the view I had gleaned from listening note by note on the organ. The aids were reprogrammed and at least speech in a quiet space became easily intelligible. Furthermore the senior audiologist understood the importance of music in my life and ordered for me a pair of Phonak Nathos S+ MW aids which she said had better musical capabilities than the standard NHS aids.

“I felt I was making real progress now with audiology, but frustration soon set in with delay in the delivery of the aids, the substitution by management of a locum at one appointment resulting in a mis-setting of the aids, and repeated difficulties in ensuring that future appointments were made with the senior audiologist who had rescued me (and actually asked that all appointments be made with her). There is a real personal difficulty here – does one complain and risk alienating the organisation that is trying to help? In the end I have been quietly persistent and have eventually seen the person I need, but the missed opportunities and time lost have led to a roller coaster of hopes and disappointments lasting over 6 months.

“On the musical side things have at least been more under my control. When the right hearing loss occurred the unpleasant sound of the organ made it impossible to continue playing for services. With hearing aids (even the later Phonak pair described above) the distortion was more than I could bear, and I tried playing with no aids. Quiet music on 8’ flutes [1] was similar to what I remembered. Louder music on 8’ and 4’ diapasons [2] sounded thick and muddy, and adding further upperwork (2’ stops and mixtures) was simply frustrating because there was no change. All the majesty of the brighter sounds was lost, but I persisted in playing to myself frequently and for short times using only the quiet foundation stops. Over a period of time my musical memory and the adaptability of the brain enabled me to hear (or imagine) brighter sounds as the higher pitched stops were added. Separately these stops were almost inaudible, and different notes had no discernible pitch difference. But in the chorus there was an unmistakable element of brightness that enabled me to get some enjoyment from my playing and even contemplate returning to service playing a month or so after the sudden loss. At this time I was still taking my aids out as I approached the organ, so my initial service playing showed up the inevitable problem – I did not know what was going on in the service. I sometimes only knew when to play a hymn after a gesture from my wife in the front row of the congregation!

“This situation could not continue. I persisted without aids but with the help of a small loudspeaker placed as close to my ear as possible. It was driven from the church microphone system using its own amplifier with bass turned down and treble up to max. In this way I played for some services although most were covered by pianists in the church doing a good job on the organ in spite of their initial fears.

“As time went on I got more used to the various programmes in the aids, and began to play to myself with aids in, music programme selected, volume set almost to lowest. The distortions were many and varied. All sounds above 500 Hz had a strange edge to them. Soft flutes (with an almost pure sine waveform) had a curious repetitive hiccup caused I believe by the digital signal processing. Rapid passages of music did not sound too bad, as individual notes did not last long enough for the distortion to offend, but slow sustained notes were horrid. It was almost impossible to balance a solo stop with a suitable accompaniment on a different manual. For instance an oboe stop did not sound as it used to because so much of its energy is in the upper harmonics. The accompanying flute stop will have much of its energy within the fundamental, and the differing amplification of high and low frequencies, intended to correct my hearing, is not done precisely enough to judge the balance between a distorted oboe and a distorted flute. Much of my playing is done by remembering combinations that used to work, but when I try a new piece (or harder still a different organ) it is almost impossible to judge whether I am producing reasonable sounds.

“There are some other aspects that make practising harder work than before. Hyperacusis presents itself in odd and initially unsettling ways. Treble F on a stopped flute is hugely louder than its neighbouring notes. It actually shouts out and unbalances any chord containing it. The same note played on a stop with a differing harmonic make up, such as a diapason rank, or an open flute, fits perfectly with its neighbouring notes. Pitch discrimination has suffered. Any given note sounds slightly sharper in one ear than in the other! Chords which contain close harmonies can now set up a beating effect, presumably because of this discrepancy. So all practice is now punctuated by repeated checks of strange out of tune sounds. They are often caused by wrong notes, but they are equally often caused by my wrong ears. In some cases repeated playing of a nasty sounding chord has taught my brain to accept it, and I can even return to a piece several weeks later and find that the chord I battled with and beat into submission has stayed reasonable.

“I do not expect the lost hearing to magically return, but I do hope that somehow in the future I will find better settings for the existing aids, or perhaps better aids, that might help me hear more of the organ as it really sounds. The present problems are still considerable. So should I have given in and stopped playing? My answer is an emphatic no. I am back to playing for about 3 services a month. I have dispensed with my local treble enhanced loudspeaker. I use my aids on the music setting and have found a volume setting which is reasonably appropriate for organ sounds and much of the spoken word, and clergy have helped by giving clear announcement of hymns. I do get satisfaction from playing the right notes in the right order, even if the practice has taken longer and even though the sound of the instrument has lost a lot of beauty and majesty. I can still get my excitement from a loud conclusion with several ranks of mixture and pedal reeds. And above all I once again get a buzz from leading a congregation which sings with enthusiasm and sensitivity as I play.

“And of course there is more to musical life than playing the organ. I enjoy singing (although sometimes with difficulty) in a community choir. Pitching notes is far more uncertain than it used to be, and the trick of checking by putting a finger in an ear is not possible with a hearing aid in the way! I nearly stopped attending concerts in Birmingham Symphony Hall after a couple of disappointments, but then experimented with different seating positions. Concerts have once more become enjoyable provided I pay for the best seats in the house. Now that I can see the full orchestra clearly I find that I can hear and recognise individual instruments much better; another example of the brain’s remarkable ability to adapt and improve distressing situations.”

1. The organist’s term 8’ means organ pipes at piano pitch (i.e. the middle C key plays a middle C sound).

2. 4’ refers to pipes sounding one octave above piano pitch. Diapasons are the family of open metal pipes which give the basic organ tone, and they have more harmonic development than organ flutes.

Brian Henderson

Bromsgrove

March 2017